Foreword

Linux containers (LXC) are rapidly becoming the new "unit of deployment", changing how we develop, package, deploy and manage applications at all scales (from test / dev to production-ready environments). This application life cycle transformation also brings fluidity to use cases that were once full of friction in a traditional hypervisor Virtual Machine (VM) environment; for example, developing applications in virtual environments and seamlessly "migrating" to bare metal for production. Not only do containers simplify the workflow and life cycle of application development / deployment, they also provide performance and density benefits which cannot be overlooked.

At the forefront of Linux container tooling we have docker -- an LXC framework / runtime which abstracts away various aspects of the underlying realization by providing a pluggable architecture supporting various storage types, LXC engines / providers, etc. In addition to making LXC dead easy and fun, docker also brings a set of capabilities to the table which make containers more productive, including automated builds (make files for LXC images), versioning support, a fully featured REST API + CLI, the notion of image repositories and more.

Before going any further, let me reiterate some of the major benefits of Linux Containers from a docker perspective:

- Fast
  - Runtime performance at near bare metal speeds (typically 97+ percent of bare metal -- a few ticks shaved off for the bean counters).
  - Management operations (boot, stop, start, reboot, etc.) in seconds or milliseconds.

- Agile
  - VM-like agility -- it's still "virtualization".
  - Seamlessly move between virtual and bare metal environments, permitting new development workflows which reduce costs (e.g. develop on VMs and move to bare metal at the "click of a button" for production).

- Flexible
  - Containerize a "system" (OS less the kernel).
  - Containerize "application(s)".

- Lightweight
  - Just enough Operating System (JeOS); include only what you need, reducing image and container bloat.
  - Minimal per-container penalty, which equates to greater density and hence greater returns on existing assets -- imagine packing 100s or 1000s of containers on a single host node.

- Inexpensive
  - Open source -- free -- lower TCO.
  - Supported out of the box by modern Linux kernels.

- Ecosystem
  - Growing in popularity -- just check out the Google Trends for docker or LXC.
  - Vibrant community and numerous 3rd party applications (1000s of prebuilt images on the docker index and 100s of open source apps on github or other public sources).

- Cloudy
  - Various Cloud management frameworks provide support for creating and managing Linux Containers -- including OpenStack, my personal favorite.

Using Google-fu (searching), it's fairly easy to find existing information describing the docker LXC workflow, portability, etc. However, to date I haven't seen many data points illustrating the Cloudy operational benefits of LXC or the density potential gained by using an LXC technology vs. a traditional VM. As a result I decided to provide some Cloudy benchmarking using OpenStack with docker LXC and KVM -- the topic of this post and a recent presentation I posted to slideshare.

Update 05/11/2014

The benchmarks have been rerun and v2.0 of the results is now available on slideshare (embedded below). An overview of the revision changes is available in the presentation and copied below for your convenience:

- All tests were re-run using a single docker image throughout the tests (see my Dockerfile).

- As pointed out by an astute reader, the 15 VM serial “packing” test reflects VM boot overhead rather than steady-state; this version clarifies such claims.

- A new Cloudy test was added to better understand steady-state CPU.

- Rather than presenting direct claims of density, raw data and graphs are presented to let the reader draw their own conclusions.

- Additional “in the guest” tests were performed including blogbench.

Please read the FAQ and disclaimer in the presentation.

OpenStack benchmarking with docker LXC

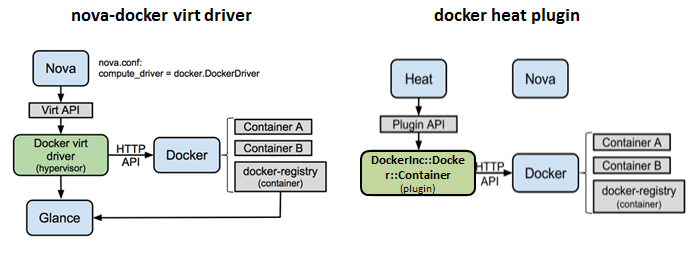

As luck would have it my favorite Cloud framework, OpenStack, provides some level of integration with docker LXC. Specifically, there has been a nova virt driver for docker LXC (which includes a glance translator to support docker based images) since the Havana time-frame, and now in Icehouse we have heat integration via a plugin for docker. The diagrams below depict the high level architecture of these components in OpenStack (courtesy of docker presentations).

Rather than taking a generic approach to the Cloudy benchmarks, I set out with a specific high level use case in mind -- "As an OpenStack Cloud user, I want an Ubuntu based VM with MySQL" -- and sought to answer the question "why would I choose docker LXC vs. KVM?". Honing the approach in this fashion allowed me to focus on a more constrained set of parameters, and in particular to use a MySQL enabled VM / Container for the tests.

To drive the Cloudy tests from an OpenStack perspective, the OpenStack project rally fits the bill perfectly. Rally allowed me to drive the OpenStack operations in a consistent, automated fashion while collecting operational times from a user point of view. Thus, rally was used to drive 4 sets of tests:

- Steady state packing of 15 VMs on a single compute node (KVM vs. docker LXC).

- Serial packing of 15 VMs on a single compute node (KVM vs. docker LXC).

- Serial soft reboot of VMs on a single compute node (KVM vs docker LXC).

- VM boot and snapshot the VM to image (KVM vs docker LXC).
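To make "collecting operational times from a user point of view" a bit more concrete, here is a minimal Python sketch of a serial timing loop. It is not rally's API and not the harness used for these benchmarks; `time_serial_operations`, `summarize` and the `fake_boot` stand-in are hypothetical names introduced purely for illustration, and the sleep simply takes the place of a real "boot and wait until active" call.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_serial_operations(operation: Callable[[int], None], count: int) -> List[float]:
    """Run `operation` `count` times, one after another, returning each duration in seconds."""
    durations = []
    for index in range(count):
        start = time.perf_counter()
        operation(index)  # e.g. "boot instance number `index` and wait for it"
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """Reduce raw durations to min / mean / max figures."""
    return {
        "min": min(durations),
        "mean": statistics.mean(durations),
        "max": max(durations),
    }


def fake_boot(index: int) -> None:
    """Hypothetical stand-in for a real cloud API call; it only sleeps briefly."""
    time.sleep(0.01)


if __name__ == "__main__":
    # 15 serial operations, mirroring the size of the packing tests above.
    print(summarize(time_serial_operations(fake_boot, count=15)))
```

Swapping `fake_boot` for a function that calls a real cloud client would give per-operation timings as a user experiences them, which is the kind of number a tool like rally reports.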

While rally was used to drive the tests through OpenStack and collect Cloudy operational times, additional metrics were needed from a compute node perspective to gain insight into resource usage during the Cloudy operations. I used dstat to collect various system resource metrics at 1 second intervals (in CSV format) while the Cloudy benchmarks were running. These metrics were then imported into a spreadsheet where they could be analyzed and graphed. The result was a baseline set of data reflecting the average operational times through OpenStack as well as compute node resource metrics collected during those tests.
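If you would rather script the analysis than use a spreadsheet, the same per-column averaging can be sketched in a few lines of Python. This is only an illustration and not how the published results were produced: the function names are hypothetical, the sample values are made up (they are not benchmark data), and the layout assumed is a few preamble rows followed by a header row containing a `usr` column and then one numeric sample per row. Column names are assumed to be unique.

```python
import csv
import io
import statistics
from typing import Dict, List

# Synthetic, made-up sample in an assumed dstat-like CSV layout (not real results).
SAMPLE = '''"sample preamble line"
"Host:","compute1"

"total cpu usage",,,"memory usage"
"usr","sys","idl","used"
5.0,1.0,94.0,1000.0
15.0,3.0,82.0,2000.0
'''


def load_dstat_csv(text: str, marker_column: str = "usr") -> Dict[str, List[float]]:
    """Parse CSV text into {column name: samples}, skipping rows before the header."""
    rows = list(csv.reader(io.StringIO(text)))
    header_index = next(
        (i for i, row in enumerate(rows) if marker_column in row), None
    )
    if header_index is None:
        raise ValueError(f"no header row containing {marker_column!r}")
    header = rows[header_index]
    columns: Dict[str, List[float]] = {name: [] for name in header}
    for row in rows[header_index + 1:]:
        if len(row) != len(header):
            continue  # skip blank or truncated lines
        for name, value in zip(header, row):
            columns[name].append(float(value))
    return columns


def average_per_column(columns: Dict[str, List[float]]) -> Dict[str, float]:
    """Mean of every column that has at least one sample."""
    return {name: statistics.mean(vals) for name, vals in columns.items() if vals}


if __name__ == "__main__":
    print(average_per_column(load_dstat_csv(SAMPLE)))
```

To run it against a real capture, read the file contents and pass them to `load_dstat_csv` in place of `SAMPLE`; if your capture repeats column names across groups, the header handling would need to prefix each name with its group.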

The presentation in full can be found here:

I've also made the raw data results available below on github. Feel free to use them however you see fit. Note that I didn't have the bandwidth to format / label all the data clearly so if you have questions let me know and I'll do my best to describe / update.

Note that although the performance and density factors look promising using the docker integration in OpenStack, these components are still under heavy development and thus lack full feature parity. I believe we will see these gaps closed moving forward, so I would encourage you to put on your python hat and help out if you can.

Finally, I will be discussing these results, as well as providing a quick overview of realizing Linux containers, at some upcoming conferences:

If you attend either of these conferences I would encourage you to stop by my sessions and chat containers.